Have you ever felt uncertain about how clothes will actually fit you when shopping online? Traditional clothing shopping methods make us wonder about the right size and style. Now, the emergence of virtual try-on technology provides a new solution to this problem.


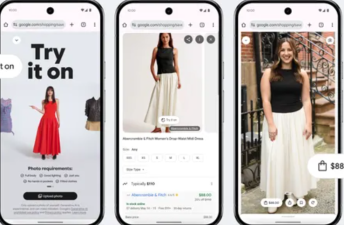

IDM-VTON is a virtual try-on model that uses high-fidelity, detail-preserving algorithms to produce realistic try-on results on photos taken in real-world settings.

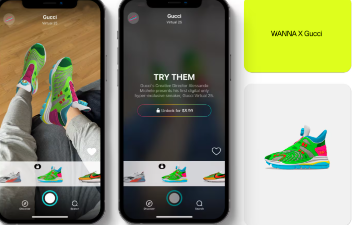

This technology is particularly suitable for e-commerce and fashion retail industries, providing users with realistic try-on effects and improving the shopping experience.

Features

Virtual try-on image generation: Combine user images with clothing images to generate virtual images of users wearing specific clothing.

Clothing detail preservation: Through GarmentNet technology, ensure that details such as clothing patterns and textures are accurately reflected in virtual images.

Text prompt understanding: Uses a visual encoder and text prompts to capture and present high-level semantic information about the clothing.

Personalization: Users can upload their own images and clothing pictures to generate try-on effects that are more in line with their personal characteristics.

Realistic try-on effects: Generate visually natural and realistic try-on images, and the clothing can accurately adapt to the posture and body shape of the person.

Technical principle

Key components:

Visual encoder: extracts high-level semantic information of clothing images, enabling the model to understand clothing styles, types, etc.

GarmentNet: a parallel UNet network responsible for capturing low-level detail features of clothing, such as textures, patterns, etc., to ensure accurate details in the generated image.

Text prompt: enhances the model's understanding of clothing features and lets users describe the details of a specific garment in text.
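As a rough illustration of how these three components could feed one generation step, the sketch below bundles their outputs into a single conditioning object. Everything in it is hypothetical: the names `TryOnConditioning`, `encode_semantics`, `extract_details`, and `build_conditioning` are illustrative stand-ins, and the stub functions return placeholder values, not real network outputs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TryOnConditioning:
    """Hypothetical container for the three signals described above."""
    semantic_embedding: tuple  # high-level features (visual encoder)
    detail_features: tuple     # low-level features (GarmentNet)
    text_prompt: str           # optional garment description


def encode_semantics(garment_pixels):
    # Stand-in for the visual encoder: collapse the image to one coarse
    # summary value (its mean), mimicking a global, high-level descriptor.
    return (sum(garment_pixels) / len(garment_pixels),)


def extract_details(garment_pixels):
    # Stand-in for GarmentNet: keep every value so fine detail survives.
    return tuple(garment_pixels)


def build_conditioning(garment_pixels, text_prompt=""):
    # Assemble all three signals for a single (hypothetical) generation step.
    if not garment_pixels:
        raise ValueError("garment image is empty")
    return TryOnConditioning(
        semantic_embedding=encode_semantics(garment_pixels),
        detail_features=extract_details(garment_pixels),
        text_prompt=text_prompt.strip(),
    )


cond = build_conditioning([0.2, 0.4, 0.6], "  short-sleeve striped shirt ")
print(cond.text_prompt)
```

The point of the split is that the coarse summary and the fine-grained features are kept as separate inputs, so style-level understanding and texture-level detail do not have to compete for the same representation.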

Model optimization:

The diffusion model has been improved so that generated images of people wearing the clothing look more realistic, and its GPU memory usage has been optimized so that it can run on Mac systems equipped with Apple Silicon.

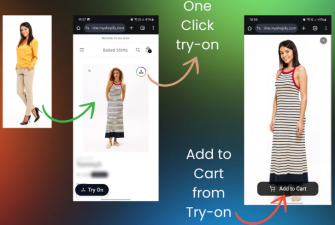

How to use

IDM-VTON is also very simple to use: an online demo is available on Hugging Face.

Just follow the steps below:

①Upload a photo of the person whose clothes you want to change, use the automatic mask generation tool, and keep the default settings.

②Apply automatic cropping and resizing functions.

③Upload a picture of the clothing and, optionally, add a description of it.

④Click the Generate button and wait for AI to complete the clothing change.
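The automatic cropping and resizing in step ② can be pictured as a center crop to a fixed aspect ratio, followed by a resize. The sketch below assumes a 3:4 portrait target of 768×1024 pixels; that size and the function name `center_crop_box` are assumptions made for illustration, not details taken from the demo.

```python
def center_crop_box(width, height, target_w=768, target_h=1024):
    """Return (left, top, right, bottom) of the largest centered box
    whose aspect ratio matches target_w:target_h."""
    if min(width, height, target_w, target_h) <= 0:
        raise ValueError("all dimensions must be positive")
    # Compare aspect ratios by cross-multiplying to stay in integers.
    if width * target_h > height * target_w:
        # Image is too wide: keep the full height, trim the sides.
        crop_w = height * target_w // target_h
        crop_h = height
    else:
        # Image is too tall (or already matches): keep the full width,
        # trim the top and bottom.
        crop_w = width
        crop_h = width * target_h // target_w
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return (left, top, left + crop_w, top + crop_h)


# A 1200x1200 square photo is trimmed to a 900x1200 portrait region.
print(center_crop_box(1200, 1200))  # (150, 0, 1050, 1200)
```

The returned box follows the (left, upper, right, lower) convention used by common image libraries, so it could be handed to a crop call and the result resized to the target size.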

Application scenarios

E-commerce: Provide users with a preview of how clothes will look when worn on them, improve shopping experience and satisfaction, and reduce returns.

Fashion retail: Fashion brands can use IDM-VTON to enhance customers' personalized experience, showcase the latest styles through virtual try-ons, attract customers and promote sales.

Personalized recommendations: Combine users' body shape and preference data to recommend clothes that suit their style and body shape.

Social media: Users can try different clothing styles, share their try-on effects, and increase social interaction and entertainment.

Fashion design and display: Designers can use IDM-VTON to showcase their designs and display clothes through virtual models without making physical samples.